In today’s long post, I’m going to explain the guidelines we follow at Retibus Software in order to handle Unicode text in Windows programs written in C and C++ with Microsoft Visual Studio. Our approach is based on using the types char and std::string and imposing the rule that text must always be encoded in UTF-8. Any other encodings or character types are only allowed as temporary variables to interact with other libraries, like the Win32 API.

Note that a lot of books on Windows programming recommend using wide characters for internationalised text, but I find that using single bytes encoded as UTF-8 as the internal representation for strings is a much more powerful and elegant approach. The reason for this is that it is easier to use char-based functions in standard C and C++. Developers are usually much more familiar with functions like strcpy in C or the C++ std::string class than with the wide-character equivalents wcscpy and std::wstring, and the support for wide characters is not completely consistent in either standard. For example, the standard C++ exception classes such as std::runtime_error only accept char-based descriptions in their constructors, and std::exception::what() returns a const char*. In addition, using the char and std::string types makes the code much more portable across platforms, as the char type is always, by definition, one byte long (sizeof(char) is 1), whereas sizeof(wchar_t) is typically 2 or 4 depending on the platform.

Even if we are developing a Windows-only application, it is good practice to isolate the Windows-dependent parts as much as possible, and using UTF-8-encoded strings is a solid way of providing full Unicode support with a highly portable and readable coding style.

But of course, there are a number of caveats that must be taken into account when we use UTF-8 in C and C++ strings. I have summed up my coding guidelines regarding strings in five rules that I list below. I hope others will find this approach useful. Feel free to use the comments section for any criticism or suggestions.

1. First rule: Use UTF-8 as the internal representation for text.

The original type for characters in the C language is char, which doubles as the type that represents single bytes, the smallest unit of memory that can be addressed by a C program, and which is typically made up of eight bits (an ‘octet’). C programmers are used to treating text as an array of char units, and the standard C library offers a set of functions that handle text, such as strcmp and strcat, which any self-respecting C programmer should be completely familiar with. The standard C++ library offers a class std::string that encapsulates a C-style char array, and all C++ programmers are familiar with that class. Because using char and std::string is the original and natural way to code text in the C and C++ languages, I think it is better to stick to those types and avoid the wide character types.

Why are wide characters better avoided? Well, the support for wide characters in C and C++ has been somewhat erratic and inconsistent. They were added to both C and C++ at a time when it was thought that 16 bits would be big enough to hold any Unicode character, which is no longer the case. The standard C99 and C++98 specifications include the wchar_t type (as a standard typedef in C and as a built-in type in C++), and string literals preceded by the L prefix, such as L"hello", are treated as arrays of wchar_t characters. In order to handle wide characters, standard C functions such as strcpy and strcmp have been replicated in wide-character versions (wcscpy, wcscmp, and so on) that are also part of the standard specifications, and the C++ standard library defines its string class as a template std::basic_string with a parameterised character type, which allows the existence of two specialisations, std::string and std::wstring. But wide characters can’t replace plain old chars completely. In fact, there are still a number of standard functions and classes that require char-based strings, and using wide characters often depends on compiler-specific extensions like _wfopen. Another serious drawback is that the wchar_t type doesn’t have a fixed size across compilers, as the standard specifications in both C and C++ don’t mandate any size or encoding. This can lead to trouble when serialising and deserialising text across different platforms. In Visual Studio, sizeof(wchar_t) returns 2, which means that a wchar_t string can only hold Unicode text as UTF-16, the encoding Windows uses internally. UTF-16 is a variable-width encoding, just like UTF-8. As I mentioned in my previous post, there was a time when it was thought that 16 bits would be enough for the whole Unicode character repertoire, but that idea was abandoned a long time ago, and 16-bit units can only be used to support Unicode when one accepts that there are ‘surrogate pairs’, i.e. characters (in the sense of Unicode code points) that span two 16-bit units in their representation. So, the idea that each wchar_t would be a full Unicode character is not true any more, and even when using wchar_t-based strings we may come across fragments of full characters.
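To make the surrogate-pair arithmetic concrete, here is a minimal sketch (EncodeUtf16 is a hypothetical helper, not a standard function) that encodes a single code point as UTF-16 using the C++11 char16_t type. A code point in the Basic Multilingual Plane takes one 16-bit unit; anything above U+FFFF, such as U+1F600, has 0x10000 subtracted, and the remaining 20 bits are split into a high surrogate (0xD800 plus the top 10 bits) and a low surrogate (0xDC00 plus the bottom 10 bits).

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: encodes one Unicode code point as UTF-16.
// Precondition: cp is a valid scalar value (at most 0x10FFFF, not a surrogate).
std::u16string EncodeUtf16(char32_t cp)
{
    assert(cp <= 0x10FFFF && (cp < 0xD800 || cp > 0xDFFF));
    std::u16string units;
    if (cp < 0x10000)
    {
        units.push_back(static_cast<char16_t>(cp));  // BMP: a single unit.
    }
    else
    {
        char32_t v = cp - 0x10000;  // 20 significant bits remain.
        units.push_back(static_cast<char16_t>(0xD800 + (v >> 10)));    // High surrogate.
        units.push_back(static_cast<char16_t>(0xDC00 + (v & 0x3FF)));  // Low surrogate.
    }
    return units;
}
```

So EncodeUtf16(0x1F600) yields the two units 0xD83D and 0xDE00, while EncodeUtf16(0x4E2D) for ‘中’ yields a single unit: one Unicode character, but not always one wchar_t.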

The problem of the variable size of wchar_t units across compilers has been addressed by the forthcoming C and C++ standards (C1x and C++0x), which define the new wide character types char16_t and char32_t. As their names suggest, these types are meant to hold 16-bit and 32-bit code units (formally, they have the same sizes as uint_least16_t and uint_least32_t). With the new types, string literals can now come in five (!) flavours:

```cpp
char plainString[] = "hello";       // Local encoding, whatever that may be.
wchar_t wideString[] = L"hello";    // Wide characters, usually UTF-16 or UTF-32.
char utf8String[] = u8"hello";      // UTF-8 encoding (C++20 changes the element type to char8_t).
char16_t utf16String[] = u"hello";  // UTF-16 encoding.
char32_t utf32String[] = U"hello";  // UTF-32 encoding.
```

So, in C1x and C++0x we now have five different ways of declaring string literals to account for different encodings and four different character types. C++0x also defines the types std::u16string and std::u32string based on the std::basic_string template, just like std::string and std::wstring. However, support for these types is still poor and any code that relies on these types will end up managing lots of encoding conversions when dealing with third-party libraries and legacy code. Because of these limitations of wide character types, I think it is much better to stick to plain old chars as the best text representation in the C and C++ languages.

Note that, contrary to a widespread misconception, it is perfectly possible to use char-based strings for text in C and C++ and fully support Unicode. We simply need to ensure that all text is encoded as UTF-8, rather than a narrow region-dependent set of characters such as the ISO-8859-1 encoding used for some Western European languages. Support of Unicode depends on which encoding we enforce for text, not on whether we choose single bytes or wide characters as our atomic character type.

An apparent drawback of the use of chars and UTF-8 to support Unicode is that there is not a one-to-one correspondence between the characters as the atomic units of the string and the Unicode code points that represent the full characters rendered by fonts. For example, in a UTF-8-encoded string, an accented letter like ‘á’ is made up of two char units, whereas a Chinese character like ‘中’ is made up of three chars. Because of that, a UTF-8-encoded string can be regarded as a glorified array of bytes rather than as a real sequence of characters. However, this is rarely a problem because there are few situations when the boundaries between Unicode code points are relevant at all. When using UTF-8, we don’t need to iterate code point by code point in order to find a particular character or piece of text in a string. This is because of the brilliant UTF-8 guarantee that the binary representation of one character cannot be part of the binary representation of another character. And when we deal with string sizes, we may need to know how many bytes make up the string for allocation purposes, but the size in terms of Unicode code points is quite useless in any realistic scenario. Besides, the concept of what constitutes one individual character is fraught with grey areas. There are many scripts (Arabic and Devanagari, for example) where letters can be merged together in ligature forms and it may be a bit of a moot point whether such ligatures should be considered as one character or a sequence of separate characters. In the few cases where we may need to iterate through Unicode code points, like for example a word wrap algorithm, we can do that through a utility function or class. It is not difficult to write such a class in C++, but I’ll leave that for a future post.
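As a minimal sketch of the points above (CountCodePoints is a hypothetical helper, not a library function), the following code counts code points by skipping UTF-8 continuation bytes. It assumes valid UTF-8 input and uses hex escapes so that the source stays ASCII.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical helper: counts Unicode code points in a UTF-8 string.
// Continuation bytes have the bit pattern 10xxxxxx; every other byte starts
// a new code point, so counting the non-continuation bytes gives the answer.
// Assumes the input is valid UTF-8.
std::size_t CountCodePoints(const std::string& utf8)
{
    std::size_t count = 0;
    for (unsigned char c : utf8)
    {
        if ((c & 0xC0) != 0x80)
        {
            ++count;
        }
    }
    return count;
}
```

For instance, for text = "ca\xC3\xB1\xC3\xB3n \xE4\xB8\xAD\xE6\x96\x87" (‘cañón 中文’), text.size() is 14 bytes while CountCodePoints(text) is 8. A plain byte-wise text.find("\xE4\xB8\xAD") returns 8, the offset of ‘中’, with no code-point iteration needed, precisely because no character's UTF-8 bytes can appear inside another character's.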

A more serious problem is that of Unicode normalisation. Basically, Unicode allows diacritics to be encoded on their own as combining characters, so that ‘á’ may be encoded either as a single precomposed code point (U+00E1) or as ‘a’ followed by a combining acute accent (U+0301). Because of that, a piece of text like ‘árbol’ can be encoded in two different ways in valid Unicode. This affects comparison, as comparing for equality byte by byte would fail to take into account such alternative encodings of combined characters. The problem is, however, highly theoretical. I have yet to come across a case where two otherwise equal strings had their diacritics encoded in different ways. Since we usually read text from files using a unified approach, I can’t think of any real-life scenario where we would need to worry about normalisation. For some interesting discussions about these issues, see this thread in the Usenet group comp.lang.c++.moderated (I agree with comment 7 by user Brendan) and this question in the MSDN forums (I agree with the comments by user Igor Tandetnik). For the issue of Unicode normalisation, see this part of the Unicode standard.
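The following sketch makes the normalisation issue concrete. It spells ‘árbol’ in its precomposed form and its decomposed form using hex escapes (the constant and function names are hypothetical). Since std::string's operator== compares byte by byte, the two strings render identically but compare unequal; making them equal would need a normalisation step, which this sketch deliberately does not attempt.

```cpp
#include <cassert>
#include <string>

// 'arbol' with an acute accent on the first 'a', encoded two ways in UTF-8.
// Precomposed: U+00E1 LATIN SMALL LETTER A WITH ACUTE -> bytes C3 A1.
const std::string kArbolPrecomposed = "\xC3\xA1rbol";
// Decomposed: 'a' followed by U+0301 COMBINING ACUTE ACCENT -> bytes 61 CC 81.
const std::string kArbolDecomposed = "a\xCC\x81rbol";

// Checks that the two forms differ byte by byte.
void CheckArbolForms()
{
    assert(kArbolPrecomposed.size() == 6);  // 2 bytes for U+00E1, then "rbol".
    assert(kArbolDecomposed.size() == 7);   // 'a', 2 bytes for U+0301, then "rbol".
    assert(kArbolPrecomposed != kArbolDecomposed);
}
```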

2. Second rule: In Visual Studio, avoid any non-ASCII characters in source code.

It is always advisable to keep any hard-coded text to a minimum in the source code. We don’t want to have to recompile a program just to translate its user interface or to correct a spelling mistake in a message displayed to the user. So, hard-coded text usually ought to be restricted to simple keywords and tokens and the odd file name or registry key, which shouldn’t need any non-ASCII characters. If, for whatever reason, you want to have some hard-coded non-ASCII text within the source, you have to be aware that there is a very serious limitation: Visual Studio doesn’t support Unicode strings embedded in the source code. By default, Visual Studio saves all the source files in the local encoding specific to your system. I’m using an English version of Visual Studio 2010 Express running on Spanish Windows 7, and all the source files get saved in the Windows-1252 code page (nearly the same as ISO-8859-1). If I try to write:

```cpp
const char kChineseSampleText[] = "中文";
```

Visual Studio will warn me when it attempts to save the file that the current encoding doesn’t support all the characters that appear in the document, and will offer me the possibility of saving it in a different encoding, like UTF-8 or UTF-16 (which Visual Studio, like other Microsoft programs, sloppily calls ‘Unicode’). If we save the source file as UTF-8, Visual Studio will store the right characters in the file, but the compiler will still issue a warning:

"warning C4566: character represented by universal-character-name '\u4E2D' cannot be represented in the current code page (1252)"

This means that even if we save the file as UTF-8, the Visual Studio compiler still tries to interpret literals as being in the current code page (the narrow character set) of the system. Things will get better with the forthcoming C++0x standard. In the newer standard, only partially supported by current compilers, it will be possible to write:

```cpp
const char kChineseSampleText[] = u8"中文";
```

Here the u8 prefix specifies that the literal is encoded as UTF-8. Similar prefixes are ‘u’ for UTF-16 text as an array of char16_t characters, and ‘U’ for UTF-32 text as an array of char32_t characters. These prefixes will join the currently available ‘L’ used for wchar_t-based strings. But until this feature becomes available in the major compilers, we’d better ignore it.

Because of these limitations with the use of UTF-8 text in source files, I completely avoid the use of any non-ASCII characters in my source code. This is not difficult for me because I write all the comments in English. Programmers who write the comments in a different language may benefit from saving the files in the UTF-8 encoding, but even that would be a hassle with Visual Studio and not really necessary if the language used for the comments is part of the narrow character set of the system. Unfortunately, there is no way to tell Visual Studio, at least the Express edition, to save all new files with a Unicode encoding, so we would have to use an external editor like Notepad++ in order to resave all our source files as UTF-8 with a BOM, so that Visual Studio would recognise and respect the UTF-8 encoding from that moment on. But it would be a pain to depend on either saving the files using an external editor or on typing some Chinese to force Visual Studio to ask us about the encoding to use, so I prefer to stick to all-ASCII characters in my source code. In that way, the source files are plain ASCII and I don’t have to worry about encoding issues.

Note that on my English version, Visual Studio will warn me about Chinese characters falling outside the narrow character set it defaults to, but it won’t warn me if I write:

```cpp
const char kSpanishSampleText[] = "cañón";
```

That’s because ‘cañón’ can be represented in the local code page. But the above line would then be encoded as ISO-8859-1, not UTF-8, so such a hard-coded text would violate the rule to use UTF-8 as the internal representation of text, and care must be taken to avoid such hard-coded literals. Of course, if you use a Chinese-language version of Visual Studio you should swap the examples in my explanation. On a Chinese system, ‘cañón’ would issue the warnings, whereas ‘中文’ would be accepted by the compiler, but would be stored in a Chinese encoding such as GB or Big5.

As long as Microsoft doesn’t add support for the ‘u8’ prefix and better options to enforce UTF-8 as the default encoding for source files, the only way of hard-coding non-ASCII string literals in UTF-8 is to list the numeric value of each byte. This is what I do when I want to test internationalisation support and consistency in my use of UTF-8. I typically use the following three constants:

```cpp
// Chinese characters for "zhongwen" ("Chinese language").
const char kChineseSampleText[] = {-28, -72, -83, -26, -106, -121, 0};

// Arabic "al-arabiyya" ("Arabic").
const char kArabicSampleText[] = {-40, -89, -39, -124, -40, -71, -40, -79, -40, -88, -39, -118, -40, -87, 0};

// Spanish word "canon" with an "n" with "~" on top and an "o" with an acute accent.
const char kSpanishSampleText[] = {99, 97, -61, -79, -61, -77, 110, 0};
```

Edit 27 July 2014: An alternative and more elegant way of declaring these constants, suggested by imron in a comment below, is const char kChineseSampleText[] = "\xe4\xb8\xad\xe6\x96\x87";
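To check that the hex-escape style produces exactly the same bytes as the numeric arrays, the sketch below declares the three sample strings with escapes and compares the Chinese one with its expected UTF-8 bytes. The expected bytes are written as unsigned values because the negative numbers in the original arrays assume a signed char, which is the Visual C++ default. The names ending in Hex and Bytes are hypothetical. One caveat with escapes: a \x escape swallows every following hexadecimal digit, so "\xc3\xa1b" does not mean ‘áb’; splitting it into adjacent literals, "\xc3\xa1" "b", avoids the problem.

```cpp
#include <cassert>
#include <cstring>

// The three sample strings written with hex escapes (UTF-8 bytes).
const char kChineseHex[] = "\xe4\xb8\xad\xe6\x96\x87";                                // zhongwen
const char kArabicHex[] = "\xd8\xa7\xd9\x84\xd8\xb9\xd8\xb1\xd8\xa8\xd9\x8a\xd8\xa9";  // al-arabiyya
const char kSpanishHex[] = "ca\xc3\xb1\xc3\xb3n";                                     // canon with tilde and accent

// Expected bytes for the Chinese sample: -28 is 0xE4, -72 is 0xB8, and so on.
const unsigned char kChineseBytes[] = {0xE4, 0xB8, 0xAD, 0xE6, 0x96, 0x87};

// Returns true if the hex-escaped Chinese string matches the expected bytes.
bool ChineseSampleMatches()
{
    return std::strlen(kChineseHex) == sizeof(kChineseBytes) &&
           std::memcmp(kChineseHex, kChineseBytes, sizeof(kChineseBytes)) == 0;
}
```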

These hard-coded strings are useful for testing. The first one is the Chinese word ‘中文’, the second one, useful to check if right-to-left text is displayed correctly, is the Arabic ‘العربية’, and the third one is the Spanish word ‘cañón’. Note how I have avoided the non-ASCII characters in the comments. Of course in a final application, the UTF-8-encoded text displayed in the GUI should be loaded from resource files, but the hard-coded text above comes in handy for quick tests.

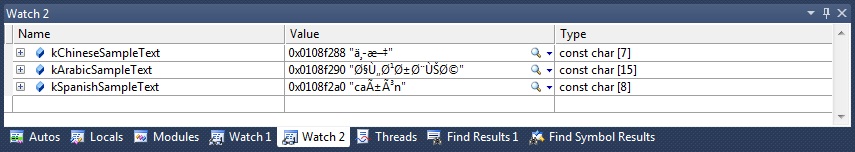

Using UTF-8 as our internal representation for text has another important consequence when using Visual Studio, which is that the text won’t be displayed correctly in the watch windows that show the value of variables while debugging. This is because these debugging windows also assume that the text is encoded in the local narrow character set. This is how the three constant literals above appear on my version of Visual Studio:

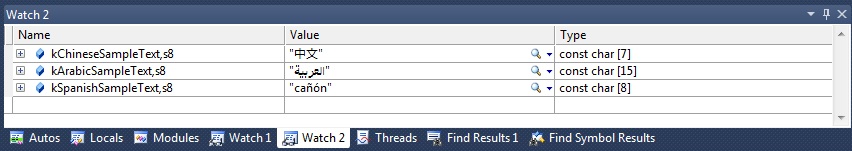

Fortunately, there is a way to display the right values thanks to the ‘s8’ format specifier. If we append ‘,s8’ to the variable name in a watch window (for example, kChineseSampleText,s8), Visual Studio reparses the text as UTF-8 and renders it correctly.

The ‘s8’ format specifier works with plain char arrays but not with std::string variables. I reported this last year to Microsoft, but they have apparently deferred fixing it to a future edition of Visual Studio.

3. Third rule: Translate between UTF-8 and UTF-16 when calling Win32 functions.

When programming for Windows we need to understand how the Win32 API functions, and other libraries built on top of them like MFC, handle characters and Unicode. The 16-bit versions of Windows and the Windows 9x family (Windows 95, 98 and ME) did not support Unicode internally, only the narrow character set that depended on the system language. So, for example, an English-language version of Windows 95 would support characters in the Western European 1252 code page, which is nearly the same as ISO-8859-1. Because of the assumption that all these regional encodings would fall under some standard or other, these character sets are referred to as ‘ANSI’ (American National Standards Institute) in Microsoft’s dated terminology. In the pre-Unicode API, functions and structs used plain char-based strings as parameters. With Windows NT, Microsoft made the decision to support Unicode internally, so that the file system would be able to cope with file names using any arbitrary characters rather than a narrow character set varying from region to region. In retrospect, I think Microsoft should have chosen to support UTF-8 encoding and stick with plain chars, but what they did at the time was split the functions and structs that make use of strings into two alternative varieties, one that uses plain chars and another one that uses wchar_t’s. For example, the old MessageBox function was split into two functions, the so-called ANSI version:

```cpp
int MessageBoxA(HWND hWnd, const char* lpText, const char* lpCaption, UINT uType);
```

and the Unicode one:

```cpp
int MessageBoxW(HWND hWnd, const wchar_t* lpText, const wchar_t* lpCaption, UINT uType);
```

The reason for these ugly names ending in ‘A’ and ‘W’ is that Microsoft didn’t expect these functions to be used directly, but through a macro:

```cpp
#ifdef UNICODE
#define MessageBox MessageBoxW
#else
#define MessageBox MessageBoxA
#endif // !UNICODE
```

All the Win32 functions and structs that handle text adhere to this paradigm, where two alternative functions or structs, one ending in ‘A’ and another one ending in ‘W’, are defined and then there is a macro that will get resolved to the ANSI version or the Unicode version depending on whether the ‘UNICODE’ macro has been #defined. Because the ANSI and Unicode varieties use different types, we need a new type that should be typedeffed as either char or wchar_t depending on whether ‘UNICODE’ has been #defined. This conditional type is TCHAR, and it allows us to treat MessageBox as if it were a function with the following signature:

```cpp
int MessageBox(HWND hWnd, const TCHAR* lpText, const TCHAR* lpCaption, UINT uType);
```

This is the way Win32 API functions are documented (except that Microsoft uses the ugly typedef LPCTSTR for const TCHAR*). Thanks to this syntactic sugar, Microsoft’s documentation treats the macros as if they were the real functions and the TCHAR type as if it were a distinct character type. The macro TEXT, together with its shorter variant _T, is also #defined in such a way that a literal like TEXT("hello") or _T("hello") can be assumed to have the const TCHAR* type, and will compile whichever character type is selected (TEXT follows UNICODE, whereas _T, from tchar.h, follows the _UNICODE macro described below). This nifty mechanism makes it possible to write code where all characters will be compiled into either plain characters in a narrow character set or wide characters in a Unicode (UTF-16) encoding. The way characters are resolved at compile time simply depends on whether the macro UNICODE has been #defined globally for the whole project. The same mechanism is used for the C run-time library, where functions like strcmp and wcscmp can be replaced by the macro _tcscmp, and the behaviour of such macros depends on whether ‘_UNICODE’ (note the underscore) has been #defined. Both ‘UNICODE’ and ‘_UNICODE’ can be enabled for the whole project through the Character Set option in the project properties (‘Use Unicode Character Set’).
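The mechanism can be imitated outside Windows in a few lines, which may make it easier to see what the preprocessor is doing. This is a sketch with hypothetical names (DEMO_UNICODE, DemoTChar, DEMO_TEXT and DemoStrLen stand in for UNICODE, TCHAR, TEXT and _tcslen); it is not the SDK's code, although it is modelled on the token-pasting approach the Windows headers use for TEXT.

```cpp
#include <cassert>
#include <cstring>
#include <cwchar>

// Hypothetical stand-ins for TCHAR, TEXT and _tcslen, so that the sketch
// compiles without <windows.h>. DEMO_UNICODE plays the role of UNICODE.
#ifdef DEMO_UNICODE
typedef wchar_t DemoTChar;
#define DEMO_TEXT_IMPL(quote) L##quote  // Paste the L prefix onto the literal.
inline std::size_t DemoStrLen(const DemoTChar* s) { return std::wcslen(s); }
#else
typedef char DemoTChar;
#define DEMO_TEXT_IMPL(quote) quote     // Leave the literal unchanged.
inline std::size_t DemoStrLen(const DemoTChar* s) { return std::strlen(s); }
#endif

// The extra level of indirection lets a macro argument expand before pasting.
#define DEMO_TEXT(quote) DEMO_TEXT_IMPL(quote)
```

Compiled as is, DEMO_TEXT("hello") is a plain char literal; compiling with -DDEMO_UNICODE turns the same line into L"hello", and DemoStrLen into a wcslen call, without any change to the calling code.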

Now this way of writing code that can be compiled as either ANSI or Unicode by the switch of a global setting may have made sense at the time when Unicode support was not universal in Windows. But the versions of Windows that are still supported today (XP, Vista and 7) are the most recent releases of Windows NT, and all of them use Unicode internally. Because of that, being able to compile alternative ANSI and Unicode versions doesn’t make any sense nowadays, and for the sake of clarity it is better to avoid the TCHAR type altogether and use an explicit character type when calling Windows functions.

Since we have decided to use chars as our internal representation, it might seem that we can use the ANSI functions, but that would be a mistake because there is no way to make Windows use the UTF-8 encoding. If we have a char-based string encoded as UTF-8 and we pass it to the ANSI version of a Win32 function, the Windows implementation of the function will reinterpret the text according to the narrow character set, and any non-ASCII characters would end up mangled. In fact, because the so-called ANSI versions use the narrow character set, it is impossible to support Unicode using them. For example, it is impossible to create a directory with the name ‘中文-español’ using the function CreateDirectoryA. For such internationalised names you need to use the Unicode version CreateDirectoryW. So, in order to support the full Unicode repertoire of characters we definitely have to use the Unicode W-terminated functions. But because those functions use wide characters and assume a UTF-16 encoding, we will need a way to convert between that encoding and our internal UTF-8 representation. We can achieve that with a couple of utility functions. It is possible to write these conversion functions in a platform-independent way by transforming the binary layout of the strings according to the specification. If we are going to use these functions with Windows code, an alternative and quicker approach is to base these utility functions on the Win32 API functions WideCharToMultiByte and MultiByteToWideChar. In C++, we can write the conversion functions as follows:

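```cpp
#include <windows.h>
#include <string>
#include <vector>

// Converts a UTF-16 (wchar_t) string to UTF-8 using the Win32 API.
std::string ConvertFromUtf16ToUtf8(const std::wstring& wstr)
{
    std::string convertedString;
    // With -1 as the input length, the returned size includes the terminating null.
    int requiredSize = WideCharToMultiByte(CP_UTF8, 0, wstr.c_str(), -1, 0, 0, 0, 0);
    if(requiredSize > 0)
    {
        std::vector<char> buffer(requiredSize);
        WideCharToMultiByte(CP_UTF8, 0, wstr.c_str(), -1, &buffer[0], requiredSize, 0, 0);
        convertedString.assign(buffer.begin(), buffer.end() - 1);  // Drop the null.
    }
    return convertedString;
}

// Converts a UTF-8 string to UTF-16 (wchar_t) using the Win32 API.
std::wstring ConvertFromUtf8ToUtf16(const std::string& str)
{
    std::wstring convertedString;
    // With -1 as the input length, the returned size includes the terminating null.
    int requiredSize = MultiByteToWideChar(CP_UTF8, 0, str.c_str(), -1, 0, 0);
    if(requiredSize > 0)
    {
        std::vector<wchar_t> buffer(requiredSize);
        MultiByteToWideChar(CP_UTF8, 0, str.c_str(), -1, &buffer[0], requiredSize);
        convertedString.assign(buffer.begin(), buffer.end() - 1);  // Drop the null.
    }
    return convertedString;
}
```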
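For the platform-independent alternative mentioned above, here is a sketch that converts between UTF-8 and UTF-16 by following the encoding rules directly instead of calling WideCharToMultiByte and MultiByteToWideChar. The names Utf8ToUtf16 and Utf16ToUtf8 are hypothetical, the UTF-16 side uses the C++11 std::u16string rather than std::wstring so that the code unit is 16 bits on every platform, and throwing std::runtime_error on malformed input is an assumed policy, one choice among several.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical portable converter: UTF-8 bytes -> UTF-16 code units.
std::u16string Utf8ToUtf16(const std::string& in)
{
    // Smallest code point allowed per number of continuation bytes (rejects overlongs).
    static const char32_t kMinValue[] = {0, 0x80, 0x800, 0x10000};
    std::u16string out;
    std::size_t i = 0;
    while (i < in.size())
    {
        unsigned char lead = static_cast<unsigned char>(in[i]);
        char32_t cp;
        std::size_t extra;  // Number of continuation bytes that follow.
        if (lead < 0x80)              { cp = lead;        extra = 0; }
        else if ((lead >> 5) == 0x06) { cp = lead & 0x1F; extra = 1; }
        else if ((lead >> 4) == 0x0E) { cp = lead & 0x0F; extra = 2; }
        else if ((lead >> 3) == 0x1E) { cp = lead & 0x07; extra = 3; }
        else throw std::runtime_error("invalid UTF-8 lead byte");
        if (in.size() - i <= extra)
            throw std::runtime_error("truncated UTF-8 sequence");
        for (std::size_t k = 1; k <= extra; ++k)
        {
            unsigned char b = static_cast<unsigned char>(in[i + k]);
            if ((b & 0xC0) != 0x80)
                throw std::runtime_error("invalid UTF-8 continuation byte");
            cp = (cp << 6) | (b & 0x3F);
        }
        if (cp < kMinValue[extra] || cp > 0x10FFFF || (cp >= 0xD800 && cp <= 0xDFFF))
            throw std::runtime_error("overlong or out-of-range UTF-8 sequence");
        if (cp < 0x10000)
        {
            out.push_back(static_cast<char16_t>(cp));
        }
        else  // Split into a surrogate pair.
        {
            char32_t v = cp - 0x10000;
            out.push_back(static_cast<char16_t>(0xD800 + (v >> 10)));
            out.push_back(static_cast<char16_t>(0xDC00 + (v & 0x3FF)));
        }
        i += extra + 1;
    }
    return out;
}

// Hypothetical portable converter: UTF-16 code units -> UTF-8 bytes.
std::string Utf16ToUtf8(const std::u16string& in)
{
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i)
    {
        char32_t cp = in[i];
        if (cp >= 0xD800 && cp <= 0xDBFF)  // High surrogate: needs a low partner.
        {
            if (i + 1 >= in.size() || in[i + 1] < 0xDC00 || in[i + 1] > 0xDFFF)
                throw std::runtime_error("unpaired high surrogate");
            cp = 0x10000 + ((cp - 0xD800) << 10) + (in[i + 1] - 0xDC00);
            ++i;
        }
        else if (cp >= 0xDC00 && cp <= 0xDFFF)
        {
            throw std::runtime_error("unpaired low surrogate");
        }
        if (cp < 0x80)
        {
            out += static_cast<char>(cp);
        }
        else if (cp < 0x800)
        {
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
        else if (cp < 0x10000)
        {
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
        else
        {
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}
```

On Windows, moving between std::u16string and std::wstring is then a matter of copying the units, since both hold UTF-16 there.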

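Edit 27 July 2014: In C++11 the above functions can be implemented in a portable way, by defining a variable of type std::wstring_convert<std::codecvt_utf8_utf16<Utf16Char>, Utf16Char> (where Utf16Char stands for the 16-bit character type, for example wchar_t on Windows) and calling from_bytes and to_bytes for the UTF-8 to UTF-16 and UTF-16 to UTF-8 conversions, respectively. Visual Studio 2012 supports this. (Note that std::wstring_convert and std::codecvt_utf8_utf16 were later deprecated in C++17.)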

Armed with these two functions we can now convert our internal UTF-8 strings to wide-character strings and the other way around when we have to call a Windows function that uses wide characters. In this way, our code will only use wide characters through temporary stack variables at the point of interaction with the Win32 API. We can manage to do this in a very clean way by writing wrapper functions for those Win32 functions that use text. For example, in order to read and write the text in a window or control, we can write the following C++ functions:

```cpp
// Sets the text of a window or control from a UTF-8 string.
void SetWindowTextUtf8(HWND hWnd, const std::string& str)
{
    std::wstring wstr = ConvertFromUtf8ToUtf16(str);
    SetWindowTextW(hWnd, wstr.c_str());
}

// Reads the text of a window or control and returns it as a UTF-8 string.
std::string GetWindowTextUtf8(HWND hWnd)
{
    // Use the W version so that the length is counted in UTF-16 units,
    // and add 1 for the terminating null character.
    int requiredSize = GetWindowTextLengthW(hWnd) + 1;
    std::vector<wchar_t> buffer(requiredSize);
    // GetWindowTextW returns the number of characters copied, excluding the null.
    int copied = GetWindowTextW(hWnd, &buffer[0], requiredSize);
    std::wstring wstr(&buffer[0], copied);
    return ConvertFromUtf16ToUtf8(wstr);
}
```

The two functions above simplify reading and writing text from HWND objects. For one thing, they provide a useful C++ façade that hides the dirty work of dealing with buffer sizes. More importantly, they hide the details of the use of wide characters, so that the client code can assume that the std::strings being read and written are encoded as UTF-8.

4. Fourth rule: Use wide-character versions of standard C and C++ functions that take file paths.

There is a very annoying problem with Microsoft’s implementation of the C and C++ standard libraries. Imagine we store the search path for some file resources as a member variable in one of our classes. Since we always use UTF-8 for our internal representation, this member will be a UTF-8-encoded std::string. Now we may want to open or create a temporary file in this directory by appending a file name to the search path and calling the C run-time function fopen. In C++ we would preferably use a std::fstream object, but in either case, we will find that everything works fine if the full path of the file name contains ASCII characters only. The problem may remain unnoticed until we try a path that has an accented letter or a Chinese character. At that moment, the code that uses fopen or std::fstream will fail. The reason for this is that the standard non-wide functions and classes that deal with the file system, such as fopen and std::fstream, assume that the char-based string is encoded using the local system’s code page, and there is no way to make them work with UTF-8 strings. Note that at this moment (2011), Microsoft does not allow setting a UTF-8 locale with the setlocale C function. According to MSDN:

The set of available languages, country/region codes, and code pages includes all those supported by the Win32 NLS API except code pages that require more than two bytes per character, such as UTF-7 and UTF-8. If you provide a code page like UTF-7 or UTF-8, setlocale will fail, returning NULL. The set of language and country/region codes supported by setlocale is listed in Language and Country/Region Strings (source).

This is really unfortunate because the code that uses fopen or std::fstream is standard C++, and we would expect that code to be part of our fully portable code that can be compiled under Windows, Mac or Linux without any changes. However, because Microsoft’s implementation of the standard libraries cannot be configured to use the UTF-8 encoding, we have to provide wrappers for these functions and classes, so that the UTF-8 string is converted to a wide UTF-16 string and the functions that accept wide-character file names, such as _wfopen, are used internally instead. Writing a wrapper for std::fstream before Visual C++ 10 was difficult because only the wide-character file stream objects wifstream and wofstream could be constructed with wide-character file names, but using such objects to extract text was a pain, so they were nearly useless unless supplemented with one’s own code-conversion facets (quite a lot of work). On the other hand, it was impossible to access a file with a name including characters outside the local code page (like “中文.txt” on a Spanish system) using the char-based ifstream and ofstream. Fortunately, the latest version of the Visual C++ compiler has extended the constructors of the standard fstream objects to accept wide-character file names (in line with new additions to the forthcoming C++0x standard; Edit 27 July 2014: this was not correct. As Seth mentioned in a comment below, C++11 hasn’t added wide-character constructors at all, so this is a Microsoft extension), which simplifies the task of writing such wrappers:

```cpp
#include <fstream>
#include <string>

// ConvertFromUtf8ToUtf16 is the Win32-based conversion function shown earlier.
// The constructors and open() overloads taking const wchar_t* file names are a
// Visual C++ extension, not standard C++.

namespace utf8
{

#ifdef _WIN32

// Input file stream that accepts UTF-8 file names.
class ifstream : public std::ifstream
{
public:
    ifstream() : std::ifstream() {}

    explicit ifstream(const char* fileName, std::ios_base::openmode mode = std::ios_base::in) :
        std::ifstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    explicit ifstream(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::in) :
        std::ifstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    void open(const char* fileName, std::ios_base::openmode mode = std::ios_base::in)
    {
        std::ifstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }

    void open(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::in)
    {
        std::ifstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }
};

// Output file stream that accepts UTF-8 file names.
class ofstream : public std::ofstream
{
public:
    ofstream() : std::ofstream() {}

    explicit ofstream(const char* fileName, std::ios_base::openmode mode = std::ios_base::out) :
        std::ofstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    explicit ofstream(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::out) :
        std::ofstream(ConvertFromUtf8ToUtf16(fileName).c_str(), mode)
    {
    }

    void open(const char* fileName, std::ios_base::openmode mode = std::ios_base::out)
    {
        std::ofstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }

    void open(const std::string& fileName, std::ios_base::openmode mode = std::ios_base::out)
    {
        std::ofstream::open(ConvertFromUtf8ToUtf16(fileName).c_str(), mode);
    }
};

#else

// Elsewhere the standard streams are assumed to accept UTF-8 paths directly.
typedef std::ifstream ifstream;
typedef std::ofstream ofstream;

#endif

} // namespace utf8
```

Thanks to this code, with the minimum change of substituting utf8::ifstream and utf8::ofstream for the std versions, we can use UTF-8-encoded file paths to open file streams. In C, a similar wrapper can be written for the fopen function.
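A minimal sketch of such a wrapper follows, written in C++ to match the other examples. The name utf8::fopen mirrors the stream classes above but is hypothetical. On Windows it converts both the path and the mode string to UTF-16 with MultiByteToWideChar and calls the CRT function _wfopen; elsewhere it assumes, as the typedefs above do, that the platform's fopen already accepts UTF-8 paths. As with fopen, a null pointer signals failure.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#ifdef _WIN32
#include <windows.h>
#include <vector>
#endif

namespace utf8
{

#ifdef _WIN32
// Converts a null-terminated UTF-8 string to UTF-16 for the wide CRT call.
inline std::wstring Widen(const char* s)
{
    int size = MultiByteToWideChar(CP_UTF8, 0, s, -1, 0, 0);
    if (size <= 0)
        return std::wstring();
    std::vector<wchar_t> buffer(size);
    MultiByteToWideChar(CP_UTF8, 0, s, -1, &buffer[0], size);
    return std::wstring(&buffer[0]);  // Stops at the terminating null.
}
#endif

// Opens a file whose path and mode are given as UTF-8 strings.
inline std::FILE* fopen(const char* path, const char* mode)
{
#ifdef _WIN32
    return _wfopen(Widen(path).c_str(), Widen(mode).c_str());
#else
    return std::fopen(path, mode);  // Assumes the OS accepts UTF-8 paths.
#endif
}

// Convenience overload for UTF-8 std::string paths.
inline std::FILE* fopen(const std::string& path, const char* mode)
{
    return utf8::fopen(path.c_str(), mode);
}

} // namespace utf8
```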

5. Fifth rule: Be careful with third-party libraries.

When using third-party libraries, care must be taken to check how these libraries support Unicode. Libraries that follow the same approach as Windows, based on wide characters, will also require encoding conversions every time text crosses the library boundaries. Libraries that use char-based strings may also assume or allow UTF-8 internally, but if a library renders text or accesses the file system we may need to tweak its settings or modify its source code to prevent it from lapsing into the narrow character set.

6. References

In addition to the references listed in the two previous posts on Unicode (Thanks for signing up, Mr. González – Welcome back, Mr. González! and Character encodings and the beauty of UTF-8) and the links within the post, I’ve also found the following discussions and articles interesting:

- Reading UTF-8 with C++ streams. Very good article by Emilio Caravaglia.

- Locale/UTF-8 file path with std::ifstream. Discussion about how to initialise a std::ifstream object with a UTF-8 path.

On rule #2, you can use the following pragma to override the character set Visual Studio uses to encode string literals (normally it encodes them in Windows-1252 or your locale’s code page).

```cpp
#pragma execution_character_set("utf-8")
```

It requires Visual Studio 2010 SP1, and the following hotfix:

http://support.microsoft.com/kb/980263

Thanks, Sean. That’s very useful. I didn’t know there was that pragma directive.

You haven’t made a compelling case IMHO. There are strong counter-arguments to your approach, including the wrapping of countless Windows functions and other things which you spent much of your time describing. There are other issues as well, such as pointer and other issues related to the use of variable-length UTF-8 strings (which UTF-16 is much less susceptible to in practice). Where portability isn’t an issue, which is likely the case for most Windows developers, your arguments are mostly academic. In fact, the amount of overhead you’re adding and increased complexity will result in code bloat, greater maintenance costs, less stability, etc. Most will have little to gain by it, and far more to lose.

The problem with guaranteeing proper Unicode support in C++ and Windows is that no solution is perfect. The approach I describe is the one I feel more comfortable with provided that the parts of the code that interact with Windows-specific calls and with the file system are small relative to the bulk of code that can be written in a platform-independent way. I don’t think the wrappers around Windows calls are difficult to maintain, and the efficiency concerns shouldn’t be important in the normal scenario where functions manipulating text aren’t time-critical.

I would say the weakest (and ugliest) part in my approach is the wrappers around stream classes. That does lead to a maintenance problem since programmers can easily forget about the in-house rule and use a standard stream class directly. But the problem with streams is that there is no way, as far as I know, to support Unicode with standard C++ streams under Windows. Again, my solution may not be good but it is the least bad one I could think of to keep the code portable. Feel free to suggest other approaches.

Finally, I’m not sure what you mean by “there are other issues as well, such as pointer and other issues related to the use of variable length”. What issues do you have in mind?

With MSVC 2010, I believe you could use something like this to convert UTF-8 strings to UTF-16 filenames:

#include <codecvt>
#include <fstream>
#include <locale>
#include <string>

std::string u8name = /* whatever */; // The name.
std::wstring_convert<std::codecvt_utf8_utf16<wchar_t>, wchar_t> wconverter; // Converter between UTF-8 and UTF-16 wide characters. Windows uses wchar_t for UTF-16.
std::wstring u16name = wconverter.from_bytes(u8name); // Convert name to UTF-16.
std::fstream fileWithU16Name(u16name); // And voila. (The wide-string constructor is an MSVC extension.)

I’m not sure if this works with every UTF-8 string, however, and MSVC 2010 doesn’t seem to support doing this with char16_t (even though it provides the charN_t types as typedefs of integral types).

I’m not an expert on Unicode, localisation, or Windows encodings, though; that’s just something I put together while messing around with encodings. Funny thing is, I could get filenames working properly by converting them with a “std::wstring_convert<std::codecvt_utf8_utf16<wchar_t>, wchar_t> wconverter”, but I couldn’t actually get UTF-8 strings to output as UTF-16 that way; that’s probably because I honestly had no idea what I was doing there, though, and just wanted to experiment and learn.

[Also, in my above message, it should be “std::wstring_convert<std::codecvt_utf8_utf16<wchar_t>, wchar_t> wconverter”, not “std::wstring_convert<std::codecvt_utf8_utf16, wchar_t> wconverter”. I think something broke when I posted it, even though it was in a <code> block.]

There are very few absolutes in programming. If this technique serves a legitimate purpose for you, by all means use it. It’s certainly not my business to tell you otherwise. If you’re using it simply to follow some theoretical belief that UTF-8 is inherently better, however, or even more portable (there are arguments for and against the use of UTF-8, of course), that alone isn’t a legitimate reason to use it IMHO, particularly if your app targets Windows only and always will. What you’re doing is unnatural in the Windows environment. Besides violating MSFT’s own best practices (which recommend UTF-16 for Windows apps, of course), I don’t see what it buys you. You’re just creating an environment that leads to the problems I previously mentioned, and you get very little in return for it. Strings that contain characters outside the BMP (Basic Multilingual Plane) are very rare in most applications, and UTF-16 will still serve your needs perfectly well without all those extraneous wrappers (the rare cases that do fall outside the BMP can be handled on a case-by-case basis). Moreover, since UTF-8 characters are variable-length, you have to jump through hoops to perform operations that traditionally assume fixed-width characters (what I was alluding to in my first post). Subscripting into a string, for instance, or incrementing a pointer through a string is no longer a trivial matter, since each Unicode character beyond U+007F now takes more than one byte. Even a simple “strlen()” won’t give you the number of characters (when dealing with legacy C-style code). OK, you can work your way around this with non-standard API functions, non-traditional C/C++ functions (where available), your own functions, or other techniques, but again, what does it buy you in the end? If your app runs under Windows only, then you don’t need to worry about other platforms, so why go to all this trouble?
Typically you would deal with UTF-8 only when doing things that actually need to work in other environments (sometimes Windows itself). If you’re writing to a file for instance, write it as UTF-8 if required (include a BOM if necessary, though not really for UTF-8), but that doesn’t mean writing your entire Windows app in UTF-8. It’s not only unnecessary if you’re running under Windows only, but largely self-defeating for the reasons I mentioned in my first post.

BTW, while I’ve never looked into it myself (there’s rarely a need to do so in the real world), I’m wondering if it’s possible to handle your situation using code pages. I doubt it off-hand, but since MSFT provides code page 65001 as sort of a pseudo code page (not a real code page using the traditional definition), it may be possible to set the active code page to this value and all ANSI WinAPI functions (those ending with “A”) would automatically handle UTF-8. Assuming it is possible (probably full of caveats even if it is), it would probably be practical in the Windows world only, though some other environments also support UTF-8 using their own non-standard code pages. UTF-16 would therefore still normally be the preferred choice.

Thanks for your reply, John. Actually, it’s nice to find someone out there reading what I write. 🙂

What the technique buys me is easier portability. For example, if I use UTF-16 file names I would have to ban the use of fstream objects completely because you can’t use them with UTF-16 strings in standard C++. Then all file opening operations will depend on a platform-specific API. That’s what I’m trying to avoid. I find it simpler to use std::fstream to interact with a file because that part of the code will be completely portable across C++ compilers.
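To make the stream part of this argument concrete, here is a minimal sketch of a UTF-8 file-opening wrapper of the kind described in the article. The name OpenUtf8 is hypothetical. On Windows it converts with MultiByteToWideChar and relies on MSVC’s non-standard wide-character open overload; elsewhere it assumes the file system treats names as byte strings, so the UTF-8 path is passed through unchanged.

```cpp
#include <fstream>
#include <string>
#ifdef _WIN32
#include <windows.h>
#endif

// Hypothetical helper: opens an input stream from a UTF-8 encoded path.
inline void OpenUtf8(std::ifstream& stream, const std::string& utf8Path,
                     std::ios_base::openmode mode = std::ios_base::in) {
#ifdef _WIN32
    // Ask for the required length (including the terminating null), then convert.
    int n = MultiByteToWideChar(CP_UTF8, 0, utf8Path.c_str(), -1, nullptr, 0);
    std::wstring wide(n > 0 ? n : 1, L'\0');
    if (n > 0) MultiByteToWideChar(CP_UTF8, 0, utf8Path.c_str(), -1, &wide[0], n);
    wide.resize(n > 0 ? n - 1 : 0);  // drop the null written by the API
    stream.open(wide.c_str(), mode);  // MSVC extension: wchar_t* file name
#else
    // Assumption: POSIX-style file names are byte strings, so UTF-8 goes through as-is.
    stream.open(utf8Path.c_str(), mode);
#endif
}
```

The rest of the code base then only ever sees std::string paths; the conversion lives in one place.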

The problem of “jumping through hoops” when iterating through a string is a real pain with certain multibyte encodings, like Chinese Big-5. This is probably what makes a lot of programmers wary of variable-length encodings, but the great thing about UTF-8 is that the problems we would find with, say, Big-5 aren’t relevant at all. In UTF-8, the code for a character can never be part of the code for a bigger character: every byte of a multibyte sequence has its high bit set, so no ASCII byte value can appear inside one. Thanks to that remarkable design feature of the UTF encodings, if I want to find an ASCII character like ‘/’ in a string, I can simply iterate through the bytes that make up the string until I find a char that matches ‘/’. There’s no chance that the ‘/’ match I find could be a fragment of a larger character. Because of that, I can think of very few realistic scenarios where you would need to iterate through the individual code points. I have written a post about how to write a code-point iterator for the rare cases where you would want that but, frankly, that is a very academic problem. So, if you ask me how I check the length of a string, well, I simply use std::string::size() (or strlen in C) to know how big it is in bytes. That size is all you need for allocation purposes, which is the usual reason why you need to know the size of a string. As an example, does it matter how many Arabic letters there are in an Arabic string? What would you use that information for? The only use of a code-point count I can think of would be a character-count feature in a word-processing program. I don’t need that at all but, for completeness, I wrote the code-point iterator post I linked to before in order to address that sort of rare situation.
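A short sketch of both points, assuming well-formed UTF-8 input: a plain byte search for ‘/’ is safe, and when a code-point count really is wanted it can be obtained by counting the bytes that are not continuation bytes (bit pattern 10xxxxxx). CountCodePoints is an illustrative name, not the iterator from the post mentioned above.

```cpp
#include <cstddef>
#include <string>

// Counts code points in a valid UTF-8 string: every code point has exactly one
// byte that is not a continuation byte (continuation bytes look like 10xxxxxx).
std::size_t CountCodePoints(const std::string& utf8) {
    std::size_t count = 0;
    for (unsigned char b : utf8) {
        if ((b & 0xC0) != 0x80) ++count;
    }
    return count;
}
```

For the hex-escaped string "\xe4\xb8\xad\xe6\x96\x87/espa\xc3\xb1ol" (“中文/español”), find('/') returns 6, size() returns 15 bytes and CountCodePoints returns 10.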

Regarding Windows code pages, as you say the use of 65001 is restricted to a few situations. Unfortunately, you can’t set the locale of a C/C++ program to that encoding, so it is impossible to force C++ streams and C functions like fopen to interpret strings as UTF-8 values. It would be great if it could be done because then the need for the ugly stream wrappers would vanish. A similar technique to make the Win32 ANSI functions work with UTF-8 encoding would be great too, but there’s no way to do that either.

You seem to be under the impression that UTF-16 is a fixed-size encoding. That’s incorrect. Unicode code points need up to 21 bits (U+0000 to U+10FFFF), so a single 16-bit unit cannot represent all of them.

In fact, UTF-16 has the disadvantages of both UTF-8 (namely: variable size) and UTF-32 (namely: it’s not backward compatible with ASCII, it’s sensitive to machine byte order and it wastes memory in most cases).
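To illustrate why UTF-16 is variable-length, here is a minimal encoder for a single code point, assuming the input is a valid scalar value (at most U+10FFFF and not itself a surrogate). Anything above U+FFFF needs a surrogate pair of two 16-bit units. EncodeUtf16 is an illustrative name.

```cpp
#include <string>

// Encodes one Unicode scalar value as UTF-16.
// Assumes cp <= 0x10FFFF and cp is not in the surrogate range D800 to DFFF.
std::u16string EncodeUtf16(char32_t cp) {
    if (cp < 0x10000) {
        return std::u16string(1, static_cast<char16_t>(cp));  // BMP: one unit
    }
    cp -= 0x10000;  // 20 bits remain, split 10/10 across the pair
    return std::u16string{static_cast<char16_t>(0xD800 + (cp >> 10)),
                          static_cast<char16_t>(0xDC00 + (cp & 0x3FF))};
}
```

EncodeUtf16(0x1F600) yields the two units 0xD83D 0xDE00, the same as the literal u"\U0001F600", so code that indexes a std::wstring on Windows faces the same kind of multi-unit characters as code that indexes a UTF-8 std::string.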

So it seems that yes, UTF-8 is superior to any other UNICODE encoding scheme in use, even if it’s variable-length.

“UTF-8 is superior to any other UNICODE encoding scheme in use”

UTF-8 is inferior to GB18030 since GB18030 is the only UNICODE encoding scheme allowed in Chinese software. The Chinese submitted GB18030 to the UNICODE consortium as way for all the other Chinese character encodings (Big Five, GB2312, etc) to be easily inter-converted.

The good news is that GB18030 is very similar to UTF-8 (including the first 128 characters that your source code is typed in) and therefore there is no sound logical reason to not use GB18030 unless you are a racist (although I believe racism is the exact reason why you can’t run a Chinese program — menu items and all — in a typical English version of Windows).

So using UTF-8 is racist?! That’s a pretty strong (and absurd) assertion. Your argument that GB18030 is superior rests solely on the fact that it has been mandated as official in mainland China, but there are no technical reasons, as far as I can see, that make it better than UTF-8. From the Wikipedia article: “GB 18030 inherits the bad aspects of GBK, most notably needing special code to safely find ASCII characters in a GB18030 sequence”. This means that, in C++, given a std::string variable s holding a Windows path, you cannot simply do auto separatorPos = s.find('\\'); to look for a backslash, as the 0x5C byte you find might actually be... the second byte of a larger character! So all the nasty problems of multibyte encodings that UTF-8 has solved are back when using GB 18030. And when you say that "you can't run a Chinese program in a typical English version of Windows", well, that is only the case when the source code uses the "ANSI" functions like MessageBoxA and assumes a locale that is different from that of the host machine, which is what Microsoft have been telling people not to do since the times of Windows NT 4.0. True, this is something that happens in a lot of software developed in China, but it is the programmers' fault, not the OS's, which has clear rules for internationalisation. Any source code that uses the wchar_t-based functions like MessageBoxW will display text correctly on any version of Windows.
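For comparison, this is roughly what the “special code” for GB18030 involves. It is a sketch that assumes well-formed input and relies on the encoding’s byte ranges: a multibyte character starts with a lead byte from 0x81 to 0xFE, the second byte of a two-byte character lies in 0x40 to 0x7E or 0x80 to 0xFE, and the second and fourth bytes of a four-byte character are the ASCII digits 0x30 to 0x39. FindAsciiInGb18030 is a hypothetical helper.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: finds an ASCII character in GB18030 text without
// matching the trailing bytes of multibyte characters. Assumes well-formed input.
std::size_t FindAsciiInGb18030(const std::string& s, char c) {
    std::size_t i = 0;
    while (i < s.size()) {
        unsigned char b = static_cast<unsigned char>(s[i]);
        if (b < 0x80) {                            // single-byte ASCII character
            if (s[i] == c) return i;
            ++i;
        } else if (b >= 0x81 && b <= 0xFE && i + 1 < s.size()) {
            unsigned char b2 = static_cast<unsigned char>(s[i + 1]);
            i += (b2 >= 0x30 && b2 <= 0x39) ? 4 : 2;  // digit second byte: 4-byte form
        } else {
            ++i;                                   // stray or truncated byte: skip it
        }
    }
    return std::string::npos;
}
```

For the three bytes 0x81 0x5C 0x5C (a two-byte character whose second byte is 0x5C, followed by a real backslash), a plain s.find('\\') returns 1, whereas FindAsciiInGb18030 returns 2. In UTF-8 the plain find is already correct.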

Ok, I understand your points. I’m not saying some of them don’t have merit, though some are weaker than others. For instance, I wouldn’t play down the need to deal with individual characters in a string. There are countless situations where people need to search, parse or manipulate strings based on individual characters and/or substrings, not bytes. They’re far more likely to screw this up if everything is UTF-8. This is immaterial to my point, however. The main issue is whether someone really needs to be doing this or not, and for most the answer is no. Wrapping everything in UTF-8 will simply make life harder for most Windows developers. A program whose shelf life runs for many years can easily wind up calling hundreds or more native API functions. If you’re going to wrap every one of them in a UTF-8 function, and provide similar wrappers for all sorts of standard C++ classes (granted, there would be fewer of these than WinAPI functions), you’re adding a significant amount of overhead and complexity to your program. It will take much greater knowledge for developers to understand how it all works (new employees even more so), and it will be much more difficult to support in general, more prone to errors and bugs, more expensive to maintain, etc. Programmers are already challenged enough as it is (most are mediocre at best, and that’s being kind), so the skill required to deal with all this isn’t widely available. Good C++ programmers are even harder to come by and getting scarcer with each passing year, since C++ is in decline on Windows platforms. The talent pool just isn’t there in most organizations. How many programmers can really cope with the amount of knowledge required to program in this type of environment (and have the discipline)? The rest are likely to screw things up even more than many already do without the added burden of becoming UTF-8 experts. Even experienced programmers will be more susceptible to errors.
Unless someone has a very strong need to be working with UTF-8 all the time (you might be an exception but most aren’t), it’s much easier and safer to just stick with UTF-16 in Windows, and avoid all these potential problems.

But the argument about programmers’ skills works both ways. My view is that by using UTF-8 you can tell programmers to treat text as if it were ASCII, using the well-documented techniques with chars and std::strings, so that they may ignore encoding issues. So, rather than expecting any programmers I may hire to be experts in Unicode, I’d simply expect them to be able to use standard C++ techniques. Only those who work on the user interface or on the parts that interact with the file system need to be knowledgeable about the in-house wrappers. Those testing the system should check that names like “中文-español-عربية” work fine when used as identifiers and file and directory names. If a part of the system doesn’t cope well with such a name, it’s probably due to a missing wrapper, which the specialist in encoding issues can fix quickly. You shouldn’t need the whole team to be experts on encoding.

How burdensome this technique is probably depends on how large the user-interface part of the application is. I’ve used this technique at the previous company where I was working and the results were good. The application we were working on was mostly 3D graphics and the user interface was a relatively isolated part of the program, so enforcing these rules was not difficult. Anyway, I agree that in those code bases where most files start with “#include <windows.h>” and Windows calls are littered all over the place, it is easier to use std::wstrings with the UTF-16 encoding that Windows uses.

I do respect your view but strongly maintain that unless someone needs to be doing this for legitimate portability or other possible reasons (I don’t know if that applies to you), it’s an academic exercise only. If someone is targeting Windows only, for instance, it’s usually self-defeating. Nothing is gained by this approach except the claim that “all my strings are UTF-8 compliant”. “So what?” I would say (respectfully). You have to ask yourself, “How do I benefit from this compared to using native UTF-16?” If you don’t benefit, which will normally be the case (for most), then what’s the point of this exercise? It just leads to all the problems I mentioned and you’re getting nothing in return. Even if you are getting something, you still have to ask yourself, “Does it outweigh the drawbacks of using this approach?” The answer for most will still usually be “no”. If it’s “yes”, then you’re in a rare position.

“How do I benefit from this compared to using native UTF-16?”

1. As I’ve said, I can use standard C++ (with the admittedly ugly addition of those file stream wrappers) in order to interact with files. That’s the killer reason for me. I haven’t seen any nice and portable solution to use UTF-16 file names with file streams (but if anyone has a nice solution, I’d love to know). There are a few other C++ idioms that aren’t possible with wide strings. For example, writing an exception class that inherits from std::runtime_error and forwards a piece of text to the standard exception on construction requires a char-based string. On the other hand, there is nothing you can do with a std::wstring in C++ that you can’t do with a std::string.
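A minimal sketch of the exception idiom from point 1. The class name Utf8Error is hypothetical; it simply forwards UTF-8 text to std::runtime_error, whose constructors only accept char-based strings. Converting the message for display (for example, to UTF-16 for a Windows message box) would still happen at the user-interface boundary.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical exception type: the message is UTF-8 text stored as plain chars,
// so it can be handed straight to the standard char-based base class.
class Utf8Error : public std::runtime_error {
public:
    explicit Utf8Error(const std::string& utf8Message)
        : std::runtime_error(utf8Message) {}
};
```

Any handler catching const std::exception& then gets the original UTF-8 bytes back from what().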

2. For serialisation purposes, using UTF-8 text internally makes it possible to send data through the network without requiring any translation of the binary layout. We don’t have to worry about endianness or the different values of sizeof(wchar_t) across platforms.
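A sketch of what point 2 means in practice, assuming a hypothetical wire format with a 4-byte little-endian length prefix. Only the length field needs an explicit byte order; the UTF-8 payload is copied verbatim, whereas a wchar_t payload would depend on both sizeof(wchar_t) and the platform’s endianness. AppendUtf8 and ReadUtf8 are illustrative names.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Appends a 4-byte little-endian length followed by the raw UTF-8 bytes.
void AppendUtf8(std::vector<unsigned char>& out, const std::string& utf8) {
    const std::uint32_t n = static_cast<std::uint32_t>(utf8.size());
    for (int i = 0; i < 4; ++i)
        out.push_back(static_cast<unsigned char>((n >> (8 * i)) & 0xFF));
    out.insert(out.end(), utf8.begin(), utf8.end());  // no transcoding, no byte swapping
}

// Reads one string written by AppendUtf8, advancing pos. Assumes well-formed input.
std::string ReadUtf8(const std::vector<unsigned char>& in, std::size_t& pos) {
    std::uint32_t n = 0;
    for (int i = 0; i < 4; ++i)
        n |= static_cast<std::uint32_t>(in[pos + i]) << (8 * i);
    pos += 4;
    std::string s(reinterpret_cast<const char*>(in.data() + pos), n);
    pos += n;
    return s;
}
```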

Those are the pros I see. As for the cons, the only one I see is the need for a facade to interact with Windows functions and other APIs that use wide characters (you also seem to think that there will be problems when dealing with text at the byte level, but you haven’t provided any example). It seems that you think that this burden outweighs the advantages I see, but that depends on the nature of the project (basically, on how big the Windows-dependent part is, and how important portability to other platforms is). And don’t forget that there are also a lot of third-party libraries that treat all text as char* parameters, so if you use UTF-16, you will have to convert text into UTF-8 (to preserve characters outside the local code page), or modify the source code, when interfacing with such libraries.

Unfortunately, there’s no free lunch when it comes to Unicode compliance in C++, and all approaches have their own pitfalls.

I don’t know the requirements of your specific app, so I’m only talking generally about most Windows apps. If your app is in fact typical (it may not be), then you’ve simply cited a few specific instances where you may require UTF-8, but haven’t justified why an entire application needs to move data around this way. In my (very long) experience, it would usually be a big mistake. There are usually very few occasions where any type of character conversion is required, and when you do need it, you simply handle it on a case-by-case basis. Normally, that’s only required when connecting to the outside world, or in some cases, when working with the few WinAPI functions that aren’t TCHAR-based (so you may have to convert from “char” to “wchar_t” or vice versa). How many points of contact are there with the outside world in most applications? Usually very few. If you need to write to a file, and it has to be UTF-8 for whatever reason, then write your data out that way, but only when required (it may not always be). If you need to send data across a network, and UTF-8 better meets your needs, then stream it out that way (but again, only if it needs to be). In all cases, however, you simply need to call “WideCharToMultiByte()” or “MultiByteToWideChar()” at the time this occurs, and of course there are a whole variety of techniques for wrapping these calls (including the ATL string conversion classes). You can also write some nice UTF-8-based classes/functions if you want. They should only be called, however, when they *are* needed, and there are normally very few instances of this. It serves no purpose, for instance, to handle all strings in your application as UTF-8 if they’re only required under limited circumstances. Why would you create a “SetWindowTextUtf8()”, for instance? Where is the string for this function coming from?
In most applications it originates from the app’s resources, where UTF-16 is the norm, assuming another source like a DB isn’t used (which is not usual). Where is the UTF-8 string coming from in your case? If you’ve explicitly converted your UTF-16 strings to UTF-8 only to later convert them back to UTF-16, then this is a huge waste of time and energy. You wouldn’t need to do this at all if UTF-16 was your native type. Working this way is normally much easier (just convert to UTF-8 when needed):

typedef std::basic_string<TCHAR> tstring;

Please understand, that I do admire your goal, which is to make UTF-8 your native type and only work with UTF-16 when interacting with Windows functions (cleanly separating the two), but for most Windows applications that approach is backwards. Everything should normally be native UTF-16 and then convert to UTF-8 only when needed. It eliminates all the extra work you’ve created for yourself, and all the problems that come with it. You seem to be playing those problems down but I know from vast experience that it would be a serious problem in most shops.
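Whichever representation is native, both sides of this discussion end up converting at some boundary. On Windows that is normally done with WideCharToMultiByte(CP_UTF8, ...); as a portable illustration, here is a hand-written UTF-16 to UTF-8 conversion on char16_t strings. The name Utf16ToUtf8 and the choice to replace unpaired surrogates with U+FFFD are assumptions of this sketch, not behaviour taken from the Windows API.

```cpp
#include <cstddef>
#include <string>

// Converts UTF-16 to UTF-8. Unpaired surrogates become U+FFFD (a choice made here).
std::string Utf16ToUtf8(const std::u16string& in) {
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        char32_t cp = in[i];
        const bool high = cp >= 0xD800 && cp <= 0xDBFF;
        if (high && i + 1 < in.size() && in[i + 1] >= 0xDC00 && in[i + 1] <= 0xDFFF) {
            cp = 0x10000 + ((cp - 0xD800) << 10) + (in[i + 1] - 0xDC00);
            ++i;  // the low surrogate has been consumed as well
        } else if (cp >= 0xD800 && cp <= 0xDFFF) {
            cp = 0xFFFD;  // unpaired surrogate: replacement character
        }
        if (cp < 0x80) {
            out += static_cast<char>(cp);
        } else if (cp < 0x800) {
            out += static_cast<char>(0xC0 | (cp >> 6));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {
            out += static_cast<char>(0xE0 | (cp >> 12));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {
            out += static_cast<char>(0xF0 | (cp >> 18));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}
```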

BTW, the situation with the native exception classes is another story altogether, but only because they work natively with “char” as you pointed out. Some might consider this a “mistake” in the standard itself (why not make them template-based) but I’ve seen arguments to the contrary (long story). In any case, the topic of exception encoding is another story (since it gets into deeper issues not worth discussing here).

Sorry, above should be:

typedef std::basic_string<TCHAR> tstring;

And others as required:

typedef std::basic_istringstream<TCHAR> tistringstream;

typedef std::basic_ostringstream<TCHAR> tostringstream;

typedef std::basic_stringstream<TCHAR> tstringstream;

etc.

Hmmm, your blog is stripping out the template arguments I posted in the above typedefs (template argument TCHAR).

I’ve corrected the template declarations in your message. It seems that you need to type &lt; and &gt; to get < and >. I’ve also used such templates in the past, but these days I’m not very keen on the TCHAR type (I mention that in the article), since I don’t expect to have to compile an “ANSI” version of the application. That’s why I prefer to use straight wchar_t and std::wstring for wide characters.

Anyway, we both agree that the internal representation of strings should be consistent. If you choose an internal UTF-16-encoded std::wstring representation, then you would need conversions when interacting with those parts of the outside world (like third-party libraries or network protocols) that require UTF-8, and in the approach I describe, it is the other way around. I think the difference in approach hinges on what we regard as “the outside world”. In multi-platform projects, it makes sense to think about Windows and Linux (or Mac or whatever) as different kinds of “outside world” that the core modules can be plugged into. This was the situation at the company where I was working before (GeoVirtual, in Barcelona, which closed down a couple of years ago), where our code consisted of a large number of modules that were mostly mathematical and graphical stuff (for geographic data, with an OpenGL viewport for rendering) and mostly platform-independent. We basically wanted our core code to build under both Windows and Linux. We were using quite a lot of third-party libraries and had some facade classes for platform-dependent things. In that way, calls to Windows were mostly isolated through those facade classes. We actually had several different applications with different styles of GUI built on the same core of libraries. For a long time, the code was not able to handle Unicode because of the use of many char-based functions where the local code page was assumed. We tried to turn all the std::strings into std::wstrings, but didn’t manage to adapt a lot of the code using file streams. Eventually, I decided to revert the std::wstring changes and use std::strings and assume UTF-8 internally. We had to send data through the network, and we received the input data through the network and through configuration XML files that were UTF-8-encoded.
In such a scenario, complicating the facade classes to interact with Windows (and with the wxWidgets GUI part, if I remember correctly) with the additional character conversions turned out to be simpler and more effective. All the parts reading data from the XML files and from the network were based on essentially the same code in Windows and Linux, and the code interacting with the mathematical and graphical third-party libraries didn’t require many changes (just adding the UTF-8 wrappers for file streams and fopen, which you would also need if you were using UTF-16 internally). I wrote this post with my experience during that time in mind. I agree that in a project where everything is Windows it may make more sense to use std::wstrings, but don’t underestimate the benefits of aiming at portability, even when it is not an immediate goal.

Hi Angel (oops.. accent missing ),

First of all, congratulations on the in-depth and comprehensive explanation.

I find myself in a situation very similar to the one you had to deal with, i.e. writing string-handling code for a C++, mostly STL-based, cross-platform OS abstraction library. The primary platform/compiler is Windows/VS2008, but Windows CE/Android/Linux/iOS also need support (in this priority order).

After much internet reading, I am tempted to follow your guidelines, possibly writing a UTF-8 based string class, wrapping std::string.

My main concern in the _WIN32 world (and any other where text is not UTF-8 by default), and that’s where I’d like your advice (I guess you’ve already smashed your nose against this wall), is how to detect whether a string passed to my library is locale-encoded or UTF-8-encoded. In other words, how do I construct my UTF-8-aware string class from the mix of UTF-8 and locale representations that a third-party app interfacing with our library might use?

Thanks for reading this

Best

Note: My original reply, like some of the posts in this thread, got accidentally deleted while removing spam messages a few months ago. I have manually restored the affected messages but I haven’t been able to recover my replies. As far as I can remember the gist of my reply to Julius6 was more or less as follows:

There’s no completely safe way of guessing what encoding a piece of text has. Note that a pure ASCII string made up of plain Latin letters is valid in a variety of encodings, so it is only when there are extended characters that one may guess based on probability and particular rules of the encodings. For example, UTF-8 has some consistency rules that other encodings won’t follow, so if the piece of text is not valid UTF-8 it must be in some other encoding.
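The consistency rules mentioned above can be checked with a small validator. This sketch (IsValidUtf8 is a hypothetical name) follows the well-formedness rules of RFC 3629: it rejects stray continuation bytes, truncated sequences, overlong encodings, UTF-16 surrogate code points and values above U+10FFFF. A string that passes is only probably UTF-8; pure ASCII, for instance, passes whatever its intended encoding.

```cpp
#include <cstddef>
#include <string>

// Returns true if s is well-formed UTF-8.
bool IsValidUtf8(const std::string& s) {
    std::size_t i = 0;
    while (i < s.size()) {
        unsigned char b = static_cast<unsigned char>(s[i]);
        std::size_t len = 0;
        char32_t cp = 0;
        if (b < 0x80) { ++i; continue; }                          // ASCII
        else if (b >= 0xC2 && b <= 0xDF) { len = 2; cp = b & 0x1F; }
        else if (b >= 0xE0 && b <= 0xEF) { len = 3; cp = b & 0x0F; }
        else if (b >= 0xF0 && b <= 0xF4) { len = 4; cp = b & 0x07; }
        else return false;  // continuation byte, C0/C1 or F5 to FF as a lead byte
        if (i + len > s.size()) return false;                     // truncated
        for (std::size_t k = 1; k < len; ++k) {
            unsigned char c = static_cast<unsigned char>(s[i + k]);
            if ((c & 0xC0) != 0x80) return false;                 // not 10xxxxxx
            cp = (cp << 6) | (c & 0x3F);
        }
        if ((len == 3 && cp < 0x800) || (len == 4 && cp < 0x10000))
            return false;                                         // overlong form
        if (cp > 0x10FFFF || (cp >= 0xD800 && cp <= 0xDFFF))
            return false;                                         // out of range or surrogate
        i += len;
    }
    return true;
}
```

Latin-1 text with accented letters usually fails this test, because a byte such as 0xF1 (ñ) is a lead byte that must be followed by continuation bytes.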

Hi Angel,

thanks for the suggestions, they make a lot of sense.

Targeting Windows, I see your point of offering the accessing application the two options, either UTF-16 or UTF-8, but for cases where the client still wants to use locale-encoded, char based strings…would you consider this approach safe?

1) Detect whether a string is valid UTF-8.

My understanding is that UTF-8 and the locale encoding are mutually exclusive, so encoding detection is possible. Characters below 128 are not an issue because they are the same in the locale and in UTF-8, while the remaining characters must be multi-byte sequences in UTF-8 but are single bytes in the current locale.

2) When detection (AssertUtf8Encoding) fails, assume the string is encoded in the current system code page. Convert it to UTF-8:

MultiByteToWideChar(CP_ACP,..);

WideCharToMultiByte(CP_UTF8,…);

// construct Utf8String class here

Thanks again

Best

Note: My original reply, like some of the posts in this thread, got accidentally deleted while removing spam messages a few months ago. I have manually restored the affected messages but I haven’t been able to recover my replies. As far as I can remember the gist of my reply to Julius6 was more or less as follows:

Yes, while that approach is not guaranteed to work in general, it will be fine in most cases, since extended characters in encodings like ISO-8859-1 have binary representations that are invalid as UTF-8.
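Step 2 of that approach could look like this if the legacy code page were ISO-8859-1, where every byte maps directly to the code point with the same value. This is an assumption made to keep the sketch portable: Windows-1252 differs in the range 0x80 to 0x9F (0x80 is the euro sign, for example), so on Windows the MultiByteToWideChar(CP_ACP, ...) route described above is the one to use. Latin1ToUtf8 is a hypothetical name.

```cpp
#include <string>

// Converts ISO-8859-1 (Latin-1) text to UTF-8. Each byte is its own code point,
// so bytes 0x80 to 0xFF become two-byte UTF-8 sequences.
std::string Latin1ToUtf8(const std::string& latin1) {
    std::string out;
    out.reserve(latin1.size() * 2);
    for (unsigned char b : latin1) {
        if (b < 0x80) {
            out += static_cast<char>(b);
        } else {
            out += static_cast<char>(0xC0 | (b >> 6));
            out += static_cast<char>(0x80 | (b & 0x3F));
        }
    }
    return out;
}
```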

I’ve never commented on a blog before, but I really must thank you for this!

I am in the process of developing a multi-platform API in which I allow UTF-8 input/output or code page input/output (char *) based on InputEncoding(enum encoding) and OutputEncoding(enum encoding). I do the conversion immediately upon input and immediately before output, and everything in MY code is done using UTF-8 internally. I use International Components for Unicode for code page conversion and to uppercase all strings. I chose ICU a while ago; should I find an alternative to ICU that uses UTF-8 internally?

I am developing a web service around this API. This works fine: the .NET wrappers that I generated using SWIG required a bit of modification, but now the .NET UTF-16 strings are marshalled nicely to my C++ library and everything works well. We also generate wrappers for Java, Perl, Python, PHP and Ruby using SWIG (I think the char * inputs/outputs will work for most of these; I will be doing a bit of research on that soon). But at some point people will actually be using the C++ API DIRECTLY, and what will THEY expect? I was thinking about adding the wchar_t inputs/outputs as you mention (and maybe even following the Microsoft convention of using #defines to automatically choose the correct one). But what happens then on Linux (all the other products we make are multi-platform, but this is the first to require Unicode)? What do Linux and Unix API users expect when they deal with Unicode? Will they feel comfortable using the UTF-8 functions? What happens if they try to use the wchar_t functions? Should the wchar_t functions be removed from the *nix APIs?

Thanks!!!

Note: My original reply, like some of the posts in this thread, got accidentally deleted while removing spam messages a few months ago. I have manually restored the affected messages but I haven’t been able to recover my replies. As far as I can remember the gist of my reply to ed was more or less as follows:

I would use char* and UTF-8 in the general multi-platform public functions of the API, and add (UTF-16-encoded) wchar_t overloads only for Windows. In that way, Windows developers with a code base that relies on TCHAR/wchar_t types and UTF-16 would also be able to use the API easily, sparing them the need for encoding conversions. I wouldn’t make the wchar_t overloads available in non-Windows platforms, though. The size of the wchar_t type depends on the compiler and you could end up with a situation where the same code would be using UTF-16 when compiled with Visual Studio under Windows, and UTF-32 with other compilers. Such a scenario would lead to problems as soon as any text is serialised for file storage or network communication.
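A minimal sketch of that API layout, with a hypothetical SetLabel function and a global standing in for the library’s internal state. The portable overload takes UTF-8 char text; the wchar_t overload exists only when _WIN32 is defined, where it converts once with WideCharToMultiByte and forwards to the UTF-8 version.

```cpp
#include <cstddef>
#include <string>
#ifdef _WIN32
#include <windows.h>
#endif

std::string g_label;  // hypothetical stand-in for the library's internal UTF-8 state

// Portable entry point: text is UTF-8 on every platform.
void SetLabel(const char* utf8) { g_label = utf8; }

#ifdef _WIN32
// Windows-only convenience overload for UTF-16 wchar_t text.
void SetLabel(const wchar_t* utf16) {
    int n = WideCharToMultiByte(CP_UTF8, 0, utf16, -1, nullptr, 0, nullptr, nullptr);
    if (n <= 0) { SetLabel(""); return; }
    std::string utf8(static_cast<std::size_t>(n), '\0');
    WideCharToMultiByte(CP_UTF8, 0, utf16, -1, &utf8[0], n, nullptr, nullptr);
    utf8.resize(static_cast<std::size_t>(n) - 1);  // drop the terminating null
    SetLabel(utf8.c_str());
}
#endif
```

On Linux or macOS the wide overload simply does not exist, so callers cannot accidentally pass 4-byte wchar_t text where the rest of the library expects UTF-8.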

For character literals, I prefer to use:

const char kChineseSampleText[] = "\xe4\xb8\xad\xe6\x96\x87";

This has a number of advantages over the approach you mention, namely:

1) You don’t have to worry about forgetting to null-terminate

2) It’s easier to input, because you can just use raw hex rather than needing to convert to negative values, and

3) It’s easier to grep for this as a string literal, and syntax highlighters also colour it as a string literal in your IDE etc.
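For reference, a sketch of the hex-escaped literal with a compile-time size check, plus one pitfall of this style: a hex escape consumes every hex digit that follows it, so an escape followed directly by a letter such as ‘a’ must be split into adjacent literals. kMixedText is an illustrative name.

```cpp
// The UTF-8 bytes for "中文" (U+4E2D U+6587).
const char kChineseSampleText[] = "\xe4\xb8\xad\xe6\x96\x87";
static_assert(sizeof(kChineseSampleText) == 7, "6 UTF-8 bytes plus the terminating null");

// "\x87abc" in a single literal would be parsed as one out-of-range escape,
// so the literal is split and the compiler concatenates the two halves.
const char kMixedText[] = "\xe6\x96\x87" "abc";
static_assert(sizeof(kMixedText) == 7, "3 UTF-8 bytes, 3 ASCII bytes and a null");
```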

Thanks, imron. That’s a very elegant solution for character literals.

In rule #4 you mention that C++11 is adding wchar_t constructors to basic_fstream:

“Fortunately, the latest version of the C++ compiler has extended the constructors of the standard fstream objects to support wide character types (in line with new additions to the forthcoming C++0x standard).”

Adding wchar_t constructors to fstream is purely a Microsoft extension and C++11 does not do this.

It’s very puzzling why Microsoft thinks this extension is necessary since other platforms, including those that use UTF-16 for the underlying storage of file names, manage perfectly well to enable standard C functions like fopen to open all files.

Note: My original reply, like some of the posts in this thread, got accidentally deleted while removing spam messages a few months ago. I have manually restored the affected messages but I haven’t been able to recover my replies. As far as I can remember, my original reply to Seth was more or less as follows:

I stand corrected. Thanks for the information.

Thanks for the article. We have been using UTF-8 internally (in a highly internationalized program) for a couple years now, but there’s still one little trick I can’t figure out. I know about the format specifiers for the watch window, but is there any way I can tell Visual Studio to render char pointers in UTF-8 in the tooltips? I don’t always want to have to type ‘somestr,s8’ in the watch window just to see what the string is in UTF-8.

Note: My original reply, like some of the posts in this thread, got accidentally deleted while removing spam messages a few months ago. I have manually restored the affected messages but I haven’t been able to recover my replies. As far as I can remember the gist of my reply to Andrew was more or less as follows:

Hi Andrew. That doesn’t seem to be possible as far as I know.

Thanks for this article (I know it’s a little old now). For all those comments calling this an “academic exercise”, know that there are some of us who *do* have to worry about portability and supporting multiple platforms. Coming from the Java world, where character encoding conversion is extremely simple, this is the best article I’ve run across for dealing with C/C++ (and especially Windows) character encoding.


Hi,

Great article and very helpful. I do have one comment / question about using UTF-8 as a general solution. What happens when a UTF-8 string contains U+0000? Won’t U+0000 be encoded as 0x00 or \x00? What prevents any of the standard C/C++ functions and APIs from interpreting 0x00 as a trailing null even though it was just meant to be a value in the middle of a string?

Do you use “modified UTF-8” where U+0000 is stored as two bytes?

Thank you

Well, I’m not sure what the problem is, so I can’t see why we would need some sort of “modified UTF-8.” In UTF-8 a null byte can never be part of a multibyte representation. It can only be the representation for the null or NUL code point, which is typically used to signal where the string comes to an end. In normal use you would never have null “in the middle of a string.” If the situation you have in mind is a stream of UTF-8 data which could actually contain a sequence of several null-terminated strings, then you would store that as a list of bytes (std::vector<char>) and the length would be explicit in the container. Maybe there’s something I’m missing, but I fail to see why the null character should be problematic.
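To make the point concrete: every byte of a multibyte UTF-8 sequence has its high bit set, so 0x00 can only ever encode U+0000 itself. A minimal sketch of the std::vector<char> case, assuming a buffer that holds several null-terminated UTF-8 strings; the function name SplitOnNull is hypothetical.

```cpp
#include <string>
#include <vector>

// Splits a byte buffer holding several null-terminated UTF-8 strings.
// The buffer length is explicit, so 0x00 bytes act purely as separators,
// and because 0x00 never occurs inside a multibyte UTF-8 sequence,
// splitting on it cannot cut a character in half.
inline std::vector<std::string> SplitOnNull(const std::vector<char>& buffer) {
    std::vector<std::string> parts;
    std::string current;
    for (char byte : buffer) {
        if (byte == '\0') {
            parts.push_back(current);
            current.clear();
        } else {
            current += byte;
        }
    }
    if (!current.empty()) parts.push_back(current);  // unterminated tail
    return parts;
}
```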

Brilliant article. I’m in the process of writing a cross platform application (Win/Mac) and this explanation was really what I needed to iron out all encoding issues. Big thumbs up!

Good article. I adopted the same policy years ago, but with MBCS instead of UTF-8, where locale support worked properly.

How would you handle strcoll (string collation), given that the default locale version will not work?

Would you just convert to UTF-16, then collate using wide chars?

Similarly, to convert to upper/lower case (for European alphabetic characters with accents etc.), would you convert to UTF-16, do the case conversion, then convert back to UTF-8?

If so, both these methods would seem to carry a sizeable performance overhead.

Thanks for your message, Andy, and apologies for the late reply. You raise a very good point. Collation, if you need it, is certainly a problem because of the complete lack of support for UTF-8 locales by Microsoft. I’ve just checked with Visual Studio 2012 that if you try to construct a locale with std::locale("es_ES.UTF-8"), you get a runtime_error with the description “bad locale name”. It’s still as bad as it used to be.
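Since locale support differs between runtimes and versions, one hedge is to probe for a locale at run time instead of assuming it exists. A minimal sketch relying only on the standard behaviour described above (the std::locale constructor throws std::runtime_error for a name it does not accept); the helper name IsLocaleAvailable is hypothetical.

```cpp
#include <locale>
#include <stdexcept>
#include <string>

// Returns true if the C++ runtime accepts the given locale name.
inline bool IsLocaleAvailable(const std::string& name) {
    try {
        std::locale probe(name);  // throws std::runtime_error if unknown
        (void)probe;
        return true;
    } catch (const std::runtime_error&) {
        return false;
    }
}
```

For example, IsLocaleAvailable("es_ES.UTF-8") would be expected to return false on the Visual Studio 2012 runtime tested above.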

Anyway, the situation where you would need proper alphabetical sorting usually involves the user interface (for example, displaying entries in a Win32 List control), so you would be within the use cases where you need to convert the strings to UTF-16 in any case. In many other cases (like, say, internal std::maps or std::sets used for quick look-up), culture-specific collation is usually not needed. In the few cases where you need collation and the user interface is not involved (like, say, writing an alphabetically ordered list of names to a UTF-8-encoded file), I’m afraid the easiest way of doing it would involve converting back and forth between UTF-8 and UTF-16. I’ve never had to worry about collation in any application I’ve worked on, so I haven’t given the problem much thought, but I think the way I would do it would be to write a comparison class that takes a valid Windows locale name like “es-ES” or “de-DE” on construction, and then, in the comparison method, converts the strings to UTF-16 and lets Windows compare them using the specified locale. By implementing the comparison class in the appropriate way, you could then use it with standard containers and algorithms like std::set and std::sort every time you need your Álvarezes and Müllers to be properly sorted. It wouldn’t be terribly efficient, but then the situations that involve sorting names aren’t usually time-critical at all.

And for conversions between lowercase and uppercase you would need to do the same. But in this case, it’s more difficult to come up with realistic use cases. Whereas you may occasionally need case-insensitive comparison for things like identifiers and tags (like the encoding of an XML file, which can come as “UTF-8” or as “utf-8”), those cases typically involve plain ASCII only. Generalising case-insensitive comparisons to non-ASCII characters is fraught with problems. To begin with, the case distinction only applies to a handful of scripts, mainly European ones such as Latin, Greek and Cyrillic, and even within those scripts there may not be a one-to-one mapping between lowercase and uppercase (think of the German ß, whose traditional uppercase form is “SS”, or the accented letters in some languages). Because of those difficulties I have always preferred to avoid case-insensitive comparison for non-ASCII characters.
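For the ASCII-only identifiers mentioned above, a case-insensitive comparison can work directly on UTF-8 bytes. A minimal sketch (the function names are hypothetical): it folds only 'A' to 'Z' and leaves every other byte alone, including all bytes of multibyte sequences, which are 0x80 or above. It deliberately avoids std::tolower, whose result depends on the current C locale and which has undefined behaviour for negative char values.

```cpp
#include <string>

// Folds only ASCII 'A'-'Z'; all other bytes pass through unchanged.
inline char ToLowerAscii(char c) {
    return (c >= 'A' && c <= 'Z') ? static_cast<char>(c - 'A' + 'a') : c;
}

// Case-insensitive equality for ASCII letters; non-ASCII text must match exactly.
inline bool EqualsIgnoreAsciiCase(const std::string& a, const std::string& b) {
    if (a.size() != b.size()) return false;
    for (std::string::size_type i = 0; i < a.size(); ++i) {
        if (ToLowerAscii(a[i]) != ToLowerAscii(b[i])) return false;
    }
    return true;
}
```

With this, “UTF-8” and “utf-8” compare equal, while “Ä” and “ä” (0xC3 0x84 and 0xC3 0xA4) are intentionally treated as different.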

I’m on the other end of the pipe – Unix code that has to deal with what naive Windows programs send me. It is ugly. Unfortunately our Windows-Multilingual applications are being taken up by users in inconvenient locales and we’re having to patch at the server to deal with users who can’t/won’t update their applications.

One issue we have is that case conversion, and consequently case insensitive operations, are locale-specific. The trivial example is “the Turkish I problem”, where the same glyph is upper or lower case depending on the locale. So you can say “case insensitive Turkish collation” but you *must* include the locale for the statement to have meaning. We have some users with that locale.

We also have users who regularly mix Arabic or Hebrew and English words in single strings. Thankfully, 99% of the time we can treat them as UTF-8 and pass them around as “lumps of bytes”, because those parts of the Windows app (almost always) convert to UTF-8 before sending anything across the internet. But the other 1%… looking at a string, trying to work out how it has been mangled by missing or incorrect conversion, and then unmangling it takes a lot of time.
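One quick first check when triaging a suspect string is whether it is well-formed UTF-8 at all: text in Latin-1 or Windows-1252 mislabelled as UTF-8 usually fails it. A minimal sketch following the well-formedness rules of the Unicode Standard; the name IsValidUtf8 is hypothetical. It cannot detect double encoding, where mis-decoded text has been re-encoded as perfectly valid UTF-8.

```cpp
#include <cstddef>
#include <string>

// True if `bytes` is well-formed UTF-8: no overlong forms, no UTF-16
// surrogates (U+D800-U+DFFF), nothing above U+10FFFF, no truncation.
inline bool IsValidUtf8(const std::string& bytes) {
    const std::size_t n = bytes.size();
    std::size_t i = 0;
    while (i < n) {
        const unsigned char lead = static_cast<unsigned char>(bytes[i]);
        if (lead <= 0x7F) { ++i; continue; }  // ASCII

        std::size_t extra = 0;  // continuation bytes expected
        unsigned char secondLow = 0x80, secondHigh = 0xBF;
        if (lead >= 0xC2 && lead <= 0xDF) {
            extra = 1;
        } else if (lead >= 0xE0 && lead <= 0xEF) {
            extra = 2;
            if (lead == 0xE0) secondLow = 0xA0;   // reject overlong 3-byte forms
            if (lead == 0xED) secondHigh = 0x9F;  // reject surrogates
        } else if (lead >= 0xF0 && lead <= 0xF4) {
            extra = 3;
            if (lead == 0xF0) secondLow = 0x90;   // reject overlong 4-byte forms
            if (lead == 0xF4) secondHigh = 0x8F;  // reject code points > U+10FFFF
        } else {
            return false;  // 0x80-0xC1 and 0xF5-0xFF never start a character
        }

        if (n - i <= extra) return false;  // sequence cut off at end of input
        for (std::size_t k = 1; k <= extra; ++k) {
            const unsigned char c = static_cast<unsigned char>(bytes[i + k]);
            const unsigned char low = (k == 1) ? secondLow : 0x80;
            const unsigned char high = (k == 1) ? secondHigh : 0xBF;
            if (c < low || c > high) return false;
        }
        i += extra + 1;
    }
    return true;
}
```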

Thank you for your blog post.

Your thoughts align very well with the UTF-8 Everywhere Manifesto.

Thanks for the link, Anders. I didn’t know that manifesto. Needless to say… I fully agree with it!

OK, so I’m on VS 2008, and I want to take this approach. Your rule #4 warns against the CRTs before VS2010, but hints at an approach that makes it possible. So my question is: where do I find information on what I need to do, and how? Or is there an example somewhere I can look at? Or should I just use the narrow-char versions of the file I/O functions until we move to a more recent version of VS? I have a lot of work in front of me moving the code base from MBCS to Unicode builds, so much that it can’t be done in one fell swoop, so I need to take a staged approach anyway. I could start moving all char* and CString to UTF-8 strings in std::string objects, using TCHAR etc. where necessary to keep both build types working until everything is converted, and ignore fopen/fstream etc. for now; then I could fix those things at some later point (I guess I could even mark them with a special Doxygen tag so that I can easily find them later on…)

Just thinking out loud; I’d appreciate any help or suggestions you could give me. It seems that I’m stuck between a rock and a hard place when it comes to providing a proper Unicode-enabled cross-platform C++ library. I’m starting to understand why every library out there provides its own string type, much to my chagrin…

Hi, Roel. As you mention, in Visual Studio 2008 it was not possible to access general Unicode filenames with the ofstream/ifstream classes. You would need to use wifstream/wofstream, but that is a bit tricky because, besides relying on non-standard support for wide-character file names, those classes force you to read and write contents using wchar_t’s. If you look into the wifstream/wofstream code, there might be a way of converting an open wi/ofstream into a regular standard i/ofstream, but I have never tried to do that, and so I can’t really tell you if such an approach would be feasible. If you can find a way to convert wchar_t-based streams into char-based streams, then you could rewrite the suggested utf8::i/ofstream wrappers by first opening the wide streams and then accessing the corresponding char-based streams. A second possibility would be to modify the header file that comes with Visual Studio 2008 and add wide-character constructors to the classes. I think you would have to provide similar constructors for the basic_filebuf and basic_streambuf classes too. One of those ends up calling a function called _Fiopen, for which you would have to write a wide-character overloaded version (you can copy it from ‘VC\crt\src\fiopen.cpp’ in a recent Visual Studio version). Of course, tampering with standard header files in this way can lead to all sorts of maintenance problems, and you can’t do this if your code is a library intended for others to use.

A third possibility would be to simply wait until you upgrade to a more recent version of Visual Studio and accept that the software doesn’t fully support Unicode filenames for the time being. You could still try to support the non-ASCII characters that are in the local non-Unicode code page (i.e. a name like ‘cañón-中文.txt’ wouldn’t work, but ‘cañón.txt’ would work in Latin1 versions of Windows and ‘中文.txt’ would work in Chinese versions of Windows). Such restricted support for extended characters usually covers most real use cases and may be a valid compromise solution until upgrading Visual Studio to a more recent version. This approach can be implemented with a modified version of the utf8::i/ofstream wrappers as follows:

namespace utf8
{
    class ifstream : public std::ifstream
    {
    public:
        ifstream() : std::ifstream() {}

        explicit ifstream(const char* fileName, std::ios_base::openmode mode = std::ios_base::in) :
#if _MSC_VER < 1600
            std::ifstream(ConvertFromUtf8ToLocalCodePage(fileName).c_str(), mode)
#else
            std::ifstream(ConvertFromUtf8ToUtf16(fileName), mode)
#endif
        {}

        (...) // Additional constructor and open methods implemented in a similar way go here.
    };

    class ofstream : public std::ofstream
    {
        (...) // Similar implementation to ifstream above.
    };
}

You would need a ConvertFromUtf8ToLocalCodePage function that simply converts a std::string in UTF-8 into a std::string that uses the local code page. Since this is for the Windows build, you can implement such a function by first calling ConvertFromUtf8ToUtf16 (sample code in the post) and then calling the Win32 function WideCharToMultiByte with the CP_ACP CodePage value.
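A sketch of the ConvertFromUtf8ToLocalCodePage helper described above. Because the exact signature of ConvertFromUtf8ToUtf16 is not shown in this thread, the sketch performs the UTF-8 to UTF-16 step inline with MultiByteToWideChar. It uses NULL rather than nullptr because VS 2008 predates nullptr. The non-Windows pass-through branch is an assumption, added only so the same code compiles elsewhere.

```cpp
#include <cstddef>
#include <string>
#ifdef _WIN32
#include <windows.h>
#endif

// Converts UTF-8 to the ANSI code page (CP_ACP) by way of UTF-16.
// Characters the code page cannot represent are replaced with the system
// default character (usually '?'), so the resulting file name may not exist.
inline std::string ConvertFromUtf8ToLocalCodePage(const std::string& utf8) {
#ifdef _WIN32
    if (utf8.empty()) return std::string();
    const int inSize = static_cast<int>(utf8.size());
    const int wideLen = MultiByteToWideChar(CP_UTF8, 0, utf8.data(), inSize, NULL, 0);
    if (wideLen <= 0) return std::string();
    std::wstring wide(static_cast<std::size_t>(wideLen), L'\0');
    MultiByteToWideChar(CP_UTF8, 0, utf8.data(), inSize, &wide[0], wideLen);

    const int ansiLen = WideCharToMultiByte(CP_ACP, 0, wide.data(), wideLen,
                                            NULL, 0, NULL, NULL);
    if (ansiLen <= 0) return std::string();
    std::string ansi(static_cast<std::size_t>(ansiLen), '\0');
    WideCharToMultiByte(CP_ACP, 0, wide.data(), wideLen, &ansi[0], ansiLen, NULL, NULL);
    return ansi;
#else
    return utf8;  // assumption: non-Windows file names are used as UTF-8 bytes
#endif
}
```

If you need to know whether anything was lost, WideCharToMultiByte can also report it through its last parameter (lpUsedDefaultChar), which this sketch leaves as NULL.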

Regarding the first possibility of using wifstream and wofstream, I forgot to mention that those wide-character streams do not decode UTF-8 by default: the conversion between the bytes in the file and wchar_t values is controlled by the locale imbued in the stream. So, when reading from or writing to a UTF-8-encoded file, one needs to imbue the stream with a UTF-8 to UTF-16 conversion facet before carrying out any I/O operation (for the details see, for example, this answer in a Stack Overflow thread: http://stackoverflow.com/a/3950840). If you try to implement the utf8::i/ofstream wrappers using this approach, you would need to take that into account and copy the codecvt_utf8 code from a more recent version of Visual Studio, since VS 2008 doesn’t ship with it.
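A minimal sketch of the imbuing trick for reading, assuming a compiler that ships the C++11 <codecvt> header (VS 2010 or later; the facets are deprecated since C++17). It uses std::codecvt_utf8_utf16<wchar_t>, the sibling of codecvt_utf8 that produces UTF-16 code units to match Windows’ 16-bit wchar_t; where wchar_t is 32 bits, std::codecvt_utf8<wchar_t> would be the UTF-32 counterpart. The helper name ReadUtf8FileAsWide is hypothetical.

```cpp
#include <codecvt>
#include <fstream>
#include <iterator>
#include <locale>
#include <string>

// Reads a UTF-8 text file into a std::wstring of UTF-16 code units.
inline std::wstring ReadUtf8FileAsWide(const char* path) {
    std::wifstream in;
    // Install the conversion facet before any character is read.
    // The locale takes ownership of the facet and deletes it later.
    in.imbue(std::locale(in.getloc(), new std::codecvt_utf8_utf16<wchar_t>));
    in.open(path);
    return std::wstring(std::istreambuf_iterator<wchar_t>(in),
                        std::istreambuf_iterator<wchar_t>());
}
```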

Come to think of it, rather than trying to convert between different types of streams as I vaguely suggested in my previous reply, in the utf8::i/ofstream wrappers you could probably simply ditch the inheritance from the standard stream classes and re-implement the methods you actually use, such as read, write and the << and >> operators. It’s not a perfect solution and you may end up re-implementing a few methods, but I think you could get full Unicode compliance in that way using VS 2008. You can put that code inside an #if _MSC_VER < 1600 block and leave the more general wrappers in the #else block that would be used once you upgrade your Visual Studio.

I thought I would jump in here and side with Ángel José Riesgo’s view on using UTF-8. I found reading this thread quite interesting because I have also driven a project for a number of years where I have settled on UTF-8 as a base encoding throughout the project, although I may have a different perspective and reason for doing this. But first, if I may, let me clarify a couple of relevant points…

First up, in my experience almost all processing of string data only really requires you to deal with the number of bytes, so UTF-8 makes this nice and simple. There are isolated cases where one needs to know or process the number of glyphs represented by the bytes in the string, but that’s very rare, certainly in the many cases relevant to the sort of applications I work with (granted, that’s just my perspective).
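A small illustration of the bytes-versus-characters distinction, as a sketch assuming valid UTF-8 input (the helper name CountCodePoints is hypothetical). It counts code points by skipping continuation bytes, which always have the bit pattern 10xxxxxx. Even this is not a glyph count: “e” followed by U+0301 COMBINING ACUTE ACCENT displays as one character but is two code points.

```cpp
#include <cstddef>
#include <string>

// Counts Unicode code points in valid UTF-8 by ignoring continuation bytes.
inline std::size_t CountCodePoints(const std::string& utf8) {
    std::size_t count = 0;
    for (char c : utf8) {
        if ((static_cast<unsigned char>(c) & 0xC0) != 0x80) ++count;
    }
    return count;
}
```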